Exploring AI Agents for Consumer Applications

How They Operate, Key Components & Future Applications

In our previous post, we explored the concept of AI agents and their growing role in consumer applications. Now, we’ll dive deeper into how they function, laying the groundwork for building one ourselves and sharing that experience.

What Is an AI Agent?

Microsoft CTO Kevin Scott, speaking during the Build 2025 keynote, described an AI agent as follows:

“[An AI agent] is a thing that a human being is able to delegate tasks to. And over time, the tasks that you’re delegating to these agents are becoming increasingly complex.”

What makes this definition particularly interesting is its flexibility. It doesn’t prescribe how an agent must be implemented. This means an agent could take many forms: a software program, a neural network, or even a physical robot. The defining characteristic isn’t its architecture but its ability to take on tasks independently.

OpenAI echoes this idea in its Practical Guide to Building Agents:

“Agents are systems that independently accomplish tasks on your behalf.”

Unlike traditional apps, which require manual input at every step, AI agents interpret, plan, and take action without constant user intervention, unlocking new ways for consumers to interact with technology.

AI Agents in Consumer Applications

AI agents are essentially software designed to help individual users accomplish digital tasks autonomously. Their key advantage over traditional apps is that they use Large Language Models (LLMs) to process natural language and translate requests into actionable steps.

For instance, imagine a travel booking app:

Traditional apps require users to manually enter details and follow a step-by-step process. If a hotel is unavailable, users must start over.

An AI-powered travel assistant, however, can understand requests like: "Find me a beachfront hotel in Valencia next weekend, but only if it has a great pool and free breakfast."

The AI-powered travel assistant would then:

✔ Search multiple sources for available hotels.

✔ Apply user preferences dynamically, filtering out unmatched options.

✔ Handle unexpected issues, like suggesting alternate dates if availability is low.

✔ Book the hotel, arrange transfers, and make dinner reservations—without requiring the user to micromanage each step.

Unlike traditional apps, AI agents adapt to uncertainty and make intelligent decisions, turning a complex request into a seamless travel experience.
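The filtering and fallback behaviour in the travel example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any real travel app works: the `Hotel` fields, the 4.0 pool-rating threshold standing in for "a great pool", and the sample hotels and dates are all hypothetical. A real agent would use an LLM to turn the user's sentence into these structured preferences and would query live booking sources.

```python
from dataclasses import dataclass


@dataclass
class Hotel:
    name: str
    beachfront: bool
    pool_rating: float  # hypothetical 0-5 score standing in for "a great pool"
    free_breakfast: bool
    available_dates: set[str]


def matches(hotel: Hotel, min_pool: float = 4.0) -> bool:
    """Apply the user's preferences: beachfront, great pool, free breakfast."""
    return hotel.beachfront and hotel.free_breakfast and hotel.pool_rating >= min_pool


def find_hotels(hotels: list[Hotel], wanted_date: str, alternate_dates: list[str]):
    """Try the requested date first, then fall back to alternate dates."""
    for date in [wanted_date, *alternate_dates]:
        options = [h for h in hotels if matches(h) and date in h.available_dates]
        if options:
            return date, options
    return None, []  # nothing suitable on any date


# Hypothetical sample data.
hotels = [
    Hotel("Playa Azul", True, 4.5, True, {"2025-06-14"}),
    Hotel("City Inn", False, 3.0, True, {"2025-06-07"}),
]
date, options = find_hotels(hotels, "2025-06-07", ["2025-06-14"])
print(date, [h.name for h in options])  # 2025-06-14 ['Playa Azul']
```

The only beachfront hotel is unavailable on the requested date, so the sketch suggests the alternate date instead of making the user start over.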

Where can AI agents be used?

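Where might it make most sense to use an agent? In its Practical Guide to Building Agents, OpenAI suggests three scenarios that reflect the kind of complex, ambiguous work in which agents can make a real difference:

Complex decision-making: workflows that involve nuanced judgment, exceptions, or context-sensitive decisions, such as refund approvals in customer service.

Difficult-to-maintain rules: systems that have become unwieldy because of extensive, intricate rulesets, such as vendor security reviews.

Heavy reliance on unstructured data: tasks that involve interpreting natural language, extracting meaning from documents, or interacting with users conversationally, such as processing a home insurance claim.

These examples come mostly from the enterprise world, reflecting the fact that the early focus for AI agent technology has been on enterprise rather than consumer use cases.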

Key Components That Power AI Agents

To function effectively, AI agents rely on several enablers:

1. Memory

The ability to remember previous interactions, so users aren’t asked to repeat the same information. No one likes that!
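As a rough illustration, memory can be thought of as two stores: long-lived facts about the user and a rolling window of recent conversation turns, both fed back to the LLM as context. This is a minimal sketch, assuming an in-process Python object; real agents typically persist memory in a database or vector store, and the `AgentMemory` class and its method names are hypothetical.

```python
from collections import deque


class AgentMemory:
    """Hypothetical minimal memory: profile facts plus the most recent turns."""

    def __init__(self, max_turns: int = 20):
        self.profile: dict[str, str] = {}        # long-lived facts about the user
        self.turns = deque(maxlen=max_turns)     # oldest turns drop off automatically

    def remember_fact(self, key: str, value: str) -> None:
        self.profile[key] = value

    def add_turn(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> str:
        """Render memory as text the agent can prepend to its next LLM prompt."""
        facts = "; ".join(f"{k}: {v}" for k, v in self.profile.items())
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        return f"Known about user: {facts}\nRecent conversation:\n{history}"


memory = AgentMemory(max_turns=2)
memory.remember_fact("home_airport", "LHR")
memory.add_turn("user", "Find me a hotel in Valencia")
memory.add_turn("agent", "Which dates?")
memory.add_turn("user", "Next weekend")  # pushes out the first turn
ctx = memory.context()
print(ctx)
```

Because the home airport is stored as a profile fact, it survives even after the conversation window rolls over, so the user is not asked for it again.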

2. LLMs (Large Language Models)

AI agents can use multiple LLMs depending on the task.

Example: Suppose a user asks: "Plan my work trip to Valencia, making time for sightseeing and restaurant reservations."

The agent might use GPT-4o to understand the request and draft an initial itinerary.

Alternatively, it could invoke a reasoning model such as Qwen 3 (developed by Alibaba) to refine the schedule, balancing work and leisure.

Finally, it could use external APIs to book flights, make reservations, and suggest personalized activities.
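Using different models for different steps usually comes down to a routing table. The sketch below is a hypothetical illustration: `general_model` and `reasoning_model` are placeholder functions standing in for real model clients (for example GPT-4o and Qwen 3), and no actual provider API is called.

```python
from typing import Callable


# Hypothetical stand-ins for real model clients; each would call a provider's API.
def general_model(prompt: str) -> str:
    return f"[general model] draft itinerary for: {prompt}"


def reasoning_model(prompt: str) -> str:
    return f"[reasoning model] refined schedule for: {prompt}"


# Map each kind of step to the model best suited for it.
ROUTES: dict[str, Callable[[str], str]] = {
    "interpret": general_model,
    "plan": reasoning_model,
}


def route(task_type: str, prompt: str) -> str:
    """Send a step to its configured model, defaulting to the general model."""
    model = ROUTES.get(task_type, general_model)
    return model(prompt)


request = "Plan my work trip to Valencia with sightseeing and dinners"
draft = route("interpret", request)  # first pass: understand and draft
plan = route("plan", draft)          # second pass: refine with a reasoning model
print(plan)
```

Keeping the mapping in one table makes it easy to swap a model for a given step without touching the rest of the agent.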

However, LLMs have a knowledge cutoff fixed at the time of training (for current models, typically sometime in 2024 or 2025). If an AI agent lacks web-browsing capabilities, it won’t be able to provide up-to-date information. For instance, if asked, "Why did Nvidia’s stock drop yesterday?" it might simply respond, "I don’t know."
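The difference a web-browsing tool makes can be shown with a small sketch. It assumes a crude, hypothetical keyword check for time-sensitive questions; in practice agents usually let the LLM itself decide when to call a search tool, and `web_search` here is any function you pass in, not a real search API.

```python
from typing import Callable, Optional

# Crude, hypothetical heuristic; real agents usually let the LLM decide when to search.
TIME_SENSITIVE_HINTS = ("today", "yesterday", "latest", "this week", "right now")


def needs_fresh_data(question: str) -> bool:
    q = question.lower()
    return any(hint in q for hint in TIME_SENSITIVE_HINTS)


def answer(question: str, web_search: Optional[Callable[[str], str]] = None) -> str:
    if needs_fresh_data(question):
        if web_search is None:
            # No browsing tool: the model cannot know about events after its cutoff.
            return "I don't know. That may be after my knowledge cutoff and I can't browse the web."
        return "From a web search: " + web_search(question)
    return "Answering from training data."  # placeholder for a normal LLM call


print(answer("Why did Nvidia's stock drop yesterday?"))
print(answer("Why did Nvidia's stock drop yesterday?", web_search=lambda q: "stub result"))
```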

3. Supporting Databases

AI agents can pull from specialized data sources to enhance their responses, for example internal support manuals for a customer service agent. A powerful method for this is Retrieval-Augmented Generation (RAG), which allows AI agents to fetch real-time or domain-specific data before responding.
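The core RAG idea is: retrieve the most relevant documents, then put them in the prompt so the LLM answers from them. Production systems typically use an embedding model and a vector database; this minimal sketch swaps in simple word-count cosine similarity so it runs with the standard library alone. The support-manual snippets are invented for illustration, and the final LLM call is not shown.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical internal support manual.
manual = [
    "Refunds are issued within 14 days of a returned item being received.",
    "To reset your router, hold the power button for 10 seconds.",
    "Delivery to Spain normally takes 3 to 5 working days.",
]
prompt = build_prompt("How long do refunds take?", manual)
print(prompt)
```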

4. Third-Party Tools and Services

AI agents often need to search the web, process payments, or interact with external systems. These are examples of third-party tools they can leverage.

A key emerging standard here is MCP (Model Context Protocol), developed by Anthropic.

While APIs define how software systems exchange data, MCP standardizes how AI agents discover and use external tools and data sources, giving them the context they need to engage with users more effectively.

MCP acts as a bridge between AI agents and external services, ensuring they can communicate effectively and execute complex tasks.

Since MCP is becoming a critical concept in AI agent development, expect to see it referenced frequently.
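Under the hood, MCP messages are JSON-RPC 2.0 requests: a client typically calls `tools/list` to discover what a server offers and `tools/call` to invoke a tool by name with arguments. The sketch below builds those messages by hand purely to show their shape. It skips the `initialize` handshake and the transport (stdio or HTTP), and the `search_hotels` tool is hypothetical; in practice you would use an official MCP SDK rather than hand-rolled JSON.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # each JSON-RPC request carries an id so responses can be matched


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends to a server."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# 2. Invoke one of them. "search_hotels" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_hotels", "arguments": {"city": "Valencia", "beachfront": True}},
)

print(list_tools)  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(call_tool)
```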

5. Other Agents

More sophisticated agents will coordinate a number of more specialised agents to achieve their tasks. In that scenario, they will need to manage handoffs between agents. Google’s A2A (Agent2Agent) protocol, announced last month, is an important new standard in this space. This starts to touch on Agentic AI, which we will cover in later posts.
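A handoff can be pictured as a coordinator looking up which specialist advertises the skill a subtask needs. This sketch loosely mirrors the idea, found in A2A, of agents advertising their skills, but it does not implement the A2A protocol: the `SpecialistAgent` and `Coordinator` classes and the agent names are hypothetical, and each specialist is just a local function.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SpecialistAgent:
    name: str
    skills: set[str]                 # what this agent advertises it can do
    handle: Callable[[str], str]     # hypothetical stand-in for a remote agent call


@dataclass
class Coordinator:
    specialists: list[SpecialistAgent] = field(default_factory=list)

    def hand_off(self, skill: str, task: str) -> str:
        """Delegate a subtask to the first specialist that advertises the skill."""
        for agent in self.specialists:
            if skill in agent.skills:
                return f"{agent.name}: {agent.handle(task)}"
        raise LookupError(f"No specialist agent offers skill {skill!r}")


coordinator = Coordinator([
    SpecialistAgent("FlightAgent", {"flights"}, lambda t: f"booked {t}"),
    SpecialistAgent("DiningAgent", {"restaurants"}, lambda t: f"reserved {t}"),
])
print(coordinator.hand_off("restaurants", "table for two in Valencia"))
# DiningAgent: reserved table for two in Valencia
```

Raising an error when no specialist matches forces the coordinator to handle the gap explicitly (for example by asking the user) rather than failing silently.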

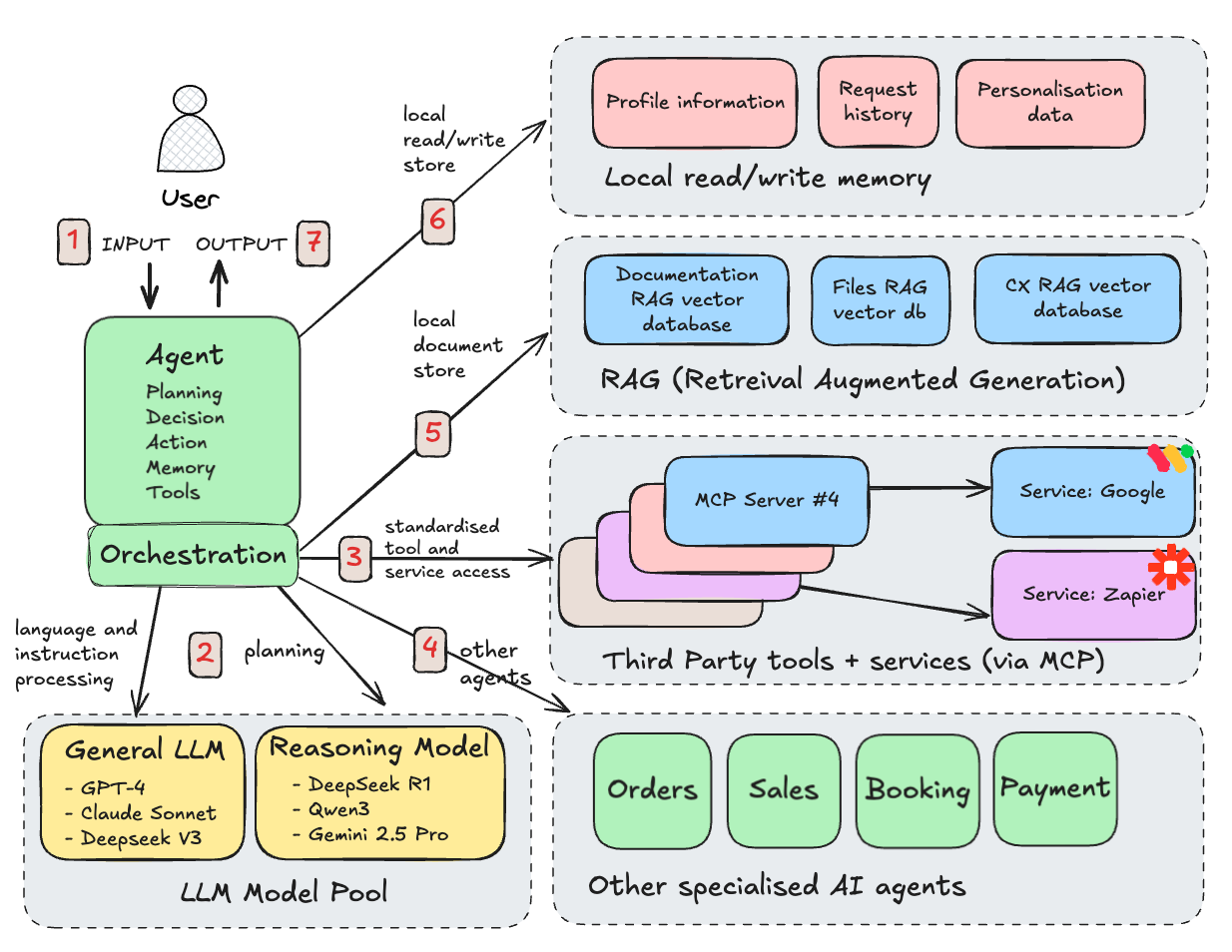

Orchestrating AI Agent Capabilities

Bringing all these elements together (memory, LLMs, data sources, third-party tools) can be quite complex. The real technical challenge is ensuring agents can:

Process input over time.

Recall previously submitted information.

Maintain state and interact with users via notifications.

Seamlessly interface with multiple tools.

Hand off to other, more specialised agents.
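Processing input over time, keeping state and notifying the user can be sketched as a small task object that accepts details whenever they arrive and announces status changes. This is a minimal, hypothetical sketch: the `AgentTask` class, the required fields and the status names are assumptions, `notify` could be anything from a push notification to an email, and a real agent would persist this state in a database rather than in memory.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable

REQUIRED = ("city", "dates")  # hypothetical details the task needs before booking


class Status(Enum):
    PENDING = "pending"
    WAITING_FOR_USER = "waiting for user"
    COMPLETE = "complete"


@dataclass
class AgentTask:
    request: str
    notify: Callable[[str], None]    # e.g. push notification, email or chat message
    status: Status = Status.PENDING
    details: dict[str, str] = field(default_factory=dict)

    def receive(self, key: str, value: str) -> None:
        """Accept input at any time; details already given are never asked for again."""
        self.details[key] = value
        self.advance()

    def advance(self) -> None:
        missing = [k for k in REQUIRED if k not in self.details]
        self._set_status(Status.WAITING_FOR_USER if missing else Status.COMPLETE)

    def _set_status(self, status: Status) -> None:
        if status != self.status:    # only notify on actual changes
            self.status = status
            self.notify(f"'{self.request}' is now {status.value}")


messages: list[str] = []
task = AgentTask("Book a Valencia hotel", notify=messages.append)
task.receive("city", "Valencia")       # dates still missing, so waiting for user
task.receive("dates", "14-15 June")    # everything present, so complete
print(messages)
```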

The diagram below is an attempt to illustrate the system architecture of an AI agent.

Let’s walk through a typical scenario. Note that the steps correspond to the numbered boxes on the diagram:

User Input: The user submits a request, typically as text, though it could also include audio, images, or video.

LLM Processing: The agent may be able to use multiple LLMs to service the request. A general-purpose LLM such as GPT-4o can be used for a basic interpretation of the request. Alternatively, a reasoning model such as o3 (a version of which powers ChatGPT’s Deep Research) could use Chain of Thought (CoT) reasoning to break the task down into a plan with stages. In this case, the agent may first ask clarifying questions, as ChatGPT Deep Research does.

External Actions: To complete the task, the agent interacts with third-party tools and services, often leveraging MCP for streamlined communication.

Other Agents: The AI agent may even call upon other more specialised agents to complete its subtasks.

Knowledge Retrieval: If proprietary data is needed (e.g., company manuals), the agent can access supporting databases via RAG (Retrieval-Augmented Generation).

Memory: The agent may recall previous requests, stored profile information, or other information saved during earlier sessions, and use it as additional context for the response.

Final Response: The agent compiles a suitable response and delivers it to the user, typically as text, though again it could include audio, images, or video.
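The seven steps of this walkthrough can be condensed into one loop. The sketch below is a hypothetical skeleton, not the architecture of any specific product: `plan_with_llm` is a stub that returns a fixed plan where a real agent would call an LLM, and the knowledge base, tools and specialist agents are plain Python dictionaries of invented examples standing in for RAG, MCP tools and other agents.

```python
from typing import Callable


def plan_with_llm(request: str, context: str) -> list[tuple[str, str]]:
    """Stub for the LLM planning step; a real agent would call a model here."""
    return [("retrieve", "travel policy"), ("tool", "search_hotels"), ("agent", "restaurants")]


def run_agent(
    request: str,                                # 1. user input
    memory: list[str],
    knowledge: dict[str, str],
    tools: dict[str, Callable[[], str]],
    agents: dict[str, Callable[[], str]],
) -> str:
    context = "; ".join(memory)                  # 6. memory as extra context
    results = []
    for step, detail in plan_with_llm(request, context):  # 2. LLM processing
        if step == "retrieve":                   # 5. knowledge retrieval (RAG)
            results.append(knowledge.get(detail, "no matching document"))
        elif step == "tool":                     # 3. external actions (e.g. via MCP)
            results.append(tools[detail]())
        elif step == "agent":                    # 4. hand-off to another agent
            results.append(agents[detail]())
    memory.append(request)                       # remember this request next time
    return "Here's what I found: " + " | ".join(results)  # 7. final response


memory = ["prefers beachfront hotels"]
reply = run_agent(
    "Plan my work trip to Valencia",
    memory,
    knowledge={"travel policy": "Hotels up to EUR 150 per night"},
    tools={"search_hotels": lambda: "2 hotels found"},
    agents={"restaurants": lambda: "table booked for Friday"},
)
print(reply)
```

The order of steps comes from the plan rather than being hard-coded, which is what lets the same loop serve very different requests.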

The Growing AI Agent Ecosystem

Some companies provide tooling to help manage the orchestration of AI agents, particularly for enterprise use cases.

A UK-based example is Portia AI, which offers an open-source SDK (Software Development Kit) for building AI agents. We met founder Mounir Mouawad recently at an industry event on AI roadmaps. The Portia AI SDK provides a useful way to understand some of the challenges of real-world AI agent development. Sierra AI also offers a high-level toolkit that allows companies to build and deploy agents.

In the consumer space, the leading AI agent-powered apps today are AI coding platforms such as Lovable, Cursor, Windsurf, and Replit. While focussed on code generation, these tools are ultimately designed for individual users, helping automate coding workflows and enhance productivity.

Other examples include:

ChatGPT’s Deep Research, which helps users build comprehensive research documents by integrating multiple sources.

Perplexity Labs, which launched this week, is similar. Here are the results of a request to explain AI agent architecture using Perplexity Labs, which help bring the earlier AI agent walkthrough to life.

Multiple other AI agent-driven propositions, such as Harvey, Glean, and Abridge, which have been highlighted in VC insights like Sequoia’s recent AI's Trillion-Dollar Opportunity: Sequoia AI Ascent 2025 Keynote.

In future posts, we’ll explore how to build an agent with Portia’s SDK and share insights from that process as well as some of the Lovable apps we are building for our personal use.

AI Agents: The Road Ahead

AI agents are rapidly evolving, enabling richer consumer experiences and unlocking new possibilities in automation and personalization. However, the ecosystem is still at an early stage. Questions remain about adoption, implementation, and the broader impact on industries.

In a future post, we'll take a hands-on approach to building an AI-powered agent, exploring how these concepts translate into real-world applications.

As always, if you have any questions, insights, or fascinating research to share, we’d love to hear from you!

Acronyms we used

LLM - Large Language Model

MCP - Model Context Protocol

API - Application Programming Interface

SDK - Software Development Kit

RAG - Retrieval-Augmented Generation

A2A - Agent2Agent protocol

CoT - Chain of Thought

New tools we used

Originality.ai - to check for plagiarism and AI usage. Scan results here. Originality.ai costs $30 to scan ~300k words.

Oh Yeah!